AI-GFS and AI-GEFS: NOAA's Quiet 32-Member AI Weather Revolution

NOAA’s AI-GFS and 32-member AI-GEFS ensemble are next-generation machine-learning weather models that deliver faster, more accurate, probabilistic global forecasts than traditional numerical systems.

11/17/2025 · 8 min read

Introduction: The Beginning of an AI Weather Revolution

Today, the world of weather forecasting is undergoing a fundamental transformation. At its heart are the U.S. National Oceanic and Atmospheric Administration (NOAA) and its next-generation machine learning-driven weather prediction systems, AI-GFS and AI-GEFS, which are, in many ways, leading the way. With powerful ensemble architectures, innovative data assimilation, and massive improvements in speed, skill, and scalability, these AI models are reshaping how we anticipate the atmosphere's behavior.

In this context, this article explores the mechanisms, significance, and operational impact of NOAA's AI-GFS and the 32-member AI-GEFS ensemble, a transformative development in global weather forecasting. It also shows how these systems compare to both traditional numerical weather prediction (NWP) and cutting-edge global AI forecasting models, including GraphCast, Pangu-Weather, FourCastNet, GenCast, and ECMWF's AIFS.[1-7]

Section 1: The "Quiet Revolution" - Why Weather Models Needed AI

To start with some background, traditional NWP is built on physics-driven models of the Earth's atmosphere. Pioneered in the mid-20th century, these systems simulate weather by solving complex partial differential equations (PDEs), resolving grid-scale atmospheric states, and assimilating as much observed and remote sensing data as possible. Over time, NWP saw steady improvements, incorporating ensemble methods (GEFS), more advanced core dynamics (FV3), and higher spatial resolution, but the limits of computational power, slow assimilation cycles, and difficulties capturing small-scale phenomena often capped accuracy and speed.

Here are some of the core pain points:

Computational expenses: Traditional NWP runs on supercomputers, with forecast runs requiring hours and enormous energy.

Assimilation bottlenecks: Incorporating new observations often requires slow, iterative optimization and data fusion.

Resolution tradeoffs: Higher resolution improves local detail but suffers from errors at boundaries and increases cost.

Model biases: Systematic and state-dependent errors can persist, limiting forecast skill for extreme events or observation-sparse regions.

More recently, advances in deep learning and foundation model architectures, especially transformers, graph neural networks, and physics-informed neural operators, opened the possibility of learning the evolution of atmospheric states directly from data:[1-7]

Massive speed improvement: AI models can generate global forecasts in seconds or minutes, orders of magnitude faster than NWP.

Finer detail from "coarser" inputs: AI can interpolate and resolve fine-scale features even from lower-resolution initial states.

Ensemble generation: Stochastic and probabilistic frameworks enable large-member ensembles with realistic spread and uncertainty quantification.

Greater flexibility: Once trained, AI models can adapt to diverse domains and observational inputs.

Altogether, the stage was set for NOAA to lead a profound operational revolution by deploying their own AI weather models.

Section 2: Technical Architecture of AI-GFS and AI-GEFS

At a high level, AI-GFS replaces the traditional FV3 dynamical core with a scalable deep learning system trained on both ERA5 reanalysis and operational analysis data.[4,8] The architecture operates at 0.25-degree resolution (approximately 28 km at the equator), matching the spatial resolution of leading AI weather models.[2-4,6,7] At each grid point, the model predicts multiple surface variables (including temperature, wind speed and direction, and mean sea-level pressure) and atmospheric variables at 13 pressure levels, including specific humidity, wind components, and temperature. [2-4,6,7]
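To make the resolution figures concrete, here is a minimal sketch of the grid arithmetic. It assumes a regular latitude-longitude grid that includes both poles and a mean Earth radius of 6371 km; the function names are illustrative and not part of any NOAA code.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def grid_shape(resolution_deg):
    """Return (n_lat, n_lon) for a regular lat-lon grid that includes both poles."""
    n_lat = int(round(180 / resolution_deg)) + 1  # -90 to +90 inclusive
    n_lon = int(round(360 / resolution_deg))      # 0 up to, but excluding, 360
    return n_lat, n_lon


def spacing_km(resolution_deg, latitude_deg=0.0):
    """East-west distance between neighbouring grid points at a given latitude."""
    arc = math.radians(resolution_deg) * EARTH_RADIUS_KM
    return arc * math.cos(math.radians(latitude_deg))


n_lat, n_lon = grid_shape(0.25)
n_points = n_lat * n_lon
print(n_lat, n_lon, n_points)           # 721 1440 1038240
print(round(spacing_km(0.25), 1))       # 27.8 (km at the equator)
print(round(spacing_km(0.25, 60.0), 1)) # 13.9 (km at 60 degrees latitude)
```

The east-west spacing shrinks toward the poles, which is one reason graph-based meshes are attractive for a spherical domain.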

Key components include:

Graph Neural Network (GNN) Encoder-Decoder: This efficiently captures spatial dependencies across the Earth's sphere, making it well-suited for atmospheric "grid" data.

Sliding Window Transformer Processor: It models temporal evolution with advanced attention, enabling robust multi-step forecasts.

Flexible Modular Design: It supports parallelism for large-scale, high-resolution input handling.

The model's data pipeline is straightforward but powerful. For training data, it utilizes decades of NWP and observational data, with continual retraining as fresh data arrives, operating on four daily cycles at 00, 06, 12, and 18 UTC.[2-4,6]

The training leverages the massive ERA5 reanalysis dataset containing petabytes of information, capturing hourly temperature, wind speed, humidity, air pressure, precipitation, and cloud cover across a global grid of 37 pressure levels with historical weather conditions dating back to 1979.[8]

Forecast skill is rigorously validated against operational analyses and direct observations. The deployment is designed for openness, with forecasts available to the public under ECMWF's and NOAA's open data policies.[4,8]

Building on this, NOAA's AI-GEFS expands AI-GFS into a full 32-member ensemble system:

Ensemble Generation: Members are produced via stochastic perturbations in initial conditions, parameter uncertainties, and model structure, mimicking physical processes.[1,5,6]

Probabilistic Skill: Ensemble spread reliably estimates forecast uncertainty, outperforming traditional ensembles in several key metrics.[1,5,6]

Speed and Scale: AI-GEFS delivers 32 parallel forecasts in a fraction of the time and computational effort needed for physics-based systems.[1,3,5,6]

Taken together, this architecture means uncertainty quantification, risk analysis, and scenario planning are all drastically improved.
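As a rough illustration of what a 32-member ensemble gives a forecaster, the sketch below turns member values at one location into an ensemble mean, a spread, and an exceedance probability. The perturbation scheme, the temperatures, and the 35 degree threshold are all made-up assumptions for illustration, not AI-GEFS output.

```python
import random
import statistics

N_MEMBERS = 32  # ensemble size quoted in this article


def make_toy_ensemble(control_value, perturbation_std, n_members=N_MEMBERS, seed=0):
    """Toy stand-in for an ensemble: a control value plus Gaussian perturbations."""
    rng = random.Random(seed)
    return [control_value + rng.gauss(0.0, perturbation_std) for _ in range(n_members)]


def ensemble_summary(members, threshold):
    """Return (mean, spread, probability of exceeding the threshold)."""
    mean = statistics.fmean(members)
    spread = statistics.stdev(members)  # sample standard deviation across members
    prob = sum(m > threshold for m in members) / len(members)
    return mean, spread, prob


# Hypothetical 2-meter temperature forecasts in degrees Celsius at one grid point.
members = make_toy_ensemble(control_value=33.0, perturbation_std=2.0)
mean, spread, prob = ensemble_summary(members, threshold=35.0)
print(f"mean={mean:.1f} C, spread={spread:.1f} C, P(T > 35 C)={prob:.0%}")
```

The exceedance probability is simply the fraction of members above the threshold, which is why larger ensembles give finer-grained probabilities.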

Section 3: Data Assimilation and Initialization in AI Weather Models

As every forecaster knows, proper initialization is critical: forecast skill hinges on accurate atmospheric "snapshots."

Traditional data assimilation relies on iterative optimization (variational, Kalman filter-based) combining satellites, radar, and surface observations. This process is computationally intensive and slow.
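For readers unfamiliar with Kalman-style updates, here is a minimal single-variable sketch of the analysis step: a model background and an observation are blended according to their error variances. Operational systems solve a far larger version of this problem; the numbers below are illustrative assumptions only.

```python
def kalman_update(background, background_var, observation, observation_var):
    """Scalar Kalman analysis step.

    Returns (analysis, analysis_variance, gain).
    """
    # The gain weights the observation more when the background is less certain.
    gain = background_var / (background_var + observation_var)
    analysis = background + gain * (observation - background)
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var, gain


# Hypothetical values: background 285 K (variance 4), observation 287 K (variance 1).
analysis, analysis_var, gain = kalman_update(285.0, 4.0, 287.0, 1.0)
print(round(gain, 2), round(analysis, 2), round(analysis_var, 2))  # 0.8 286.6 0.8
```

The analysis lands closer to the observation because the observation was assumed to be more accurate than the background, and the analysis variance is smaller than either input variance.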

By contrast, AI assimilation changes the game. New methods use generative neural networks, diffusion models, and autoregressive frameworks to fuse sparse and noisy observations more quickly.[1,5] ERA5 remains a leading standard for reanalysis-driven training and evaluation.[2-4,6,8]

In some cutting-edge designs, models introduce all-grid, all-channel, all-sky assimilation cycles, extending skillful lead times and enhancing robustness over observation-sparse regions.[2,4,6]

Section 4: Operational Deployment and Performance

Skillful Global Forecasts and Extreme Event Prediction

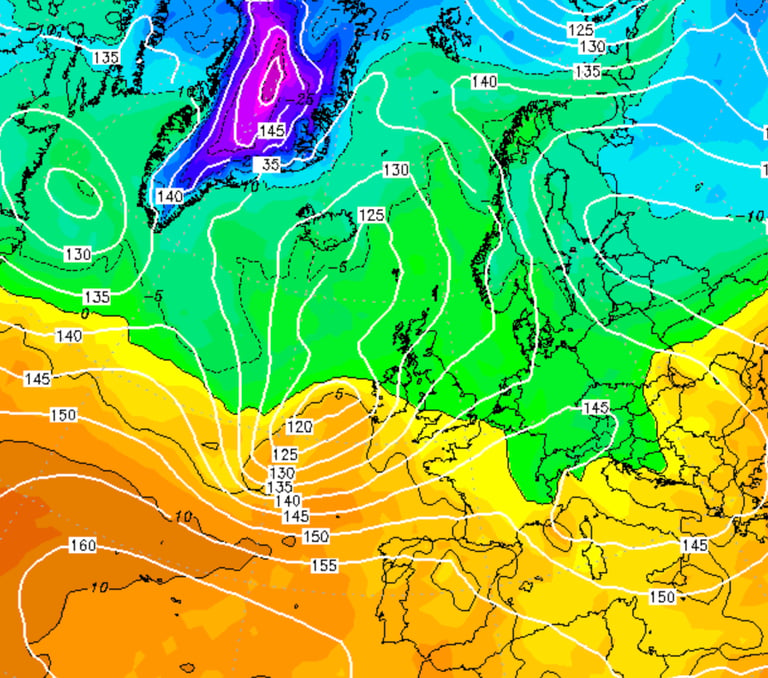

From an operational standpoint, accuracy is key: AI-GFS matches or exceeds NWP on most large-scale and local metrics, especially upper-air variables, surface parameters, and tropical cyclone tracks. Verification results from NOAA studies show that GraphCast-based models fine-tuned with GFS data achieve approximately 10% lower RMSE than operational GFS at day 10 for 500 hPa geopotential forecasts.[2]
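To show how an RMSE comparison of this kind is typically computed on a global grid, here is a minimal sketch. It assumes cosine-of-latitude weighting, a common convention in global verification that keeps the densely packed polar rows from dominating; the weighting choice and the sample numbers are assumptions, not the exact NOAA procedure.

```python
import math


def lat_weighted_rmse(forecast, truth, lats_deg):
    """RMSE over a lat-lon grid with cos(latitude) area weights.

    forecast and truth are lists of rows (one row per latitude).
    """
    weights = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_weight = sum(weights) / len(weights)
    total, count = 0.0, 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += (w / mean_weight) * (f - t) ** 2
            count += 1
    return math.sqrt(total / count)


def relative_reduction(rmse_new, rmse_ref):
    """Fractional RMSE reduction of a new model relative to a reference."""
    return (rmse_ref - rmse_new) / rmse_ref


# Tiny made-up grid: three latitudes, two longitudes.
lats = [-45.0, 0.0, 45.0]
truth = [[5500.0, 5510.0], [5800.0, 5805.0], [5520.0, 5530.0]]
forecast = [[5502.0, 5508.0], [5803.0, 5801.0], [5519.0, 5533.0]]
print(round(lat_weighted_rmse(forecast, truth, lats), 2))
# A hypothetical day-10 RMSE of 45 against a reference of 50 is a 10% reduction.
print(f"{relative_reduction(45.0, 50.0):.0%}")  # 10%
```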

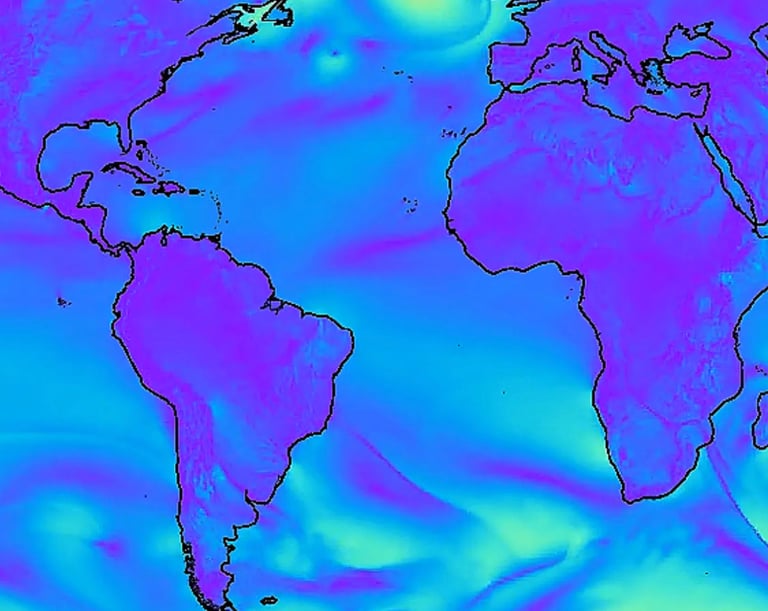

Ensemble reliability also matters. The AI-GEFS 32-member system has proven effective in capturing day-to-day atmospheric variability and extreme events (e.g., typhoons, heatwaves) with quantifiable uncertainty estimates.[1,5,6,9]

In addition, there are regional advantages: AI models outperform classical NWP over observation-sparse regions due to better assimilation and learned representations.[2,4,6-8]

A concrete verification example: During Typhoon Hagibis in October 2019, GenCast's ensemble forecasts demonstrated superior cyclone track prediction with a 12-hour position error advantage over ECMWF's ENS system out to 4 days before landfall. When initialized 7 days before landfall, GenCast's predicted trajectory showed high uncertainty, covering a wide range of possible scenarios, but became progressively more accurate at shorter lead times, successfully capturing the landfall timing and location.[1,5,9]

For Hurricane Idalia (2023), which made landfall as a Category 3 hurricane near Keaton Beach, Florida, on August 30, 2023, NHC official track forecasts using advanced AI-enhanced consensus models achieved exceptionally low track errors, with the HFIP Corrected Consensus (HCCA) slightly outperforming official forecasts at most lead times. The rapid intensification before landfall highlighted the critical need for AI models that can better predict intensity changes.[9]

Across many cases, average RMSE and skill scores (CSI, correlation, spread-error) demonstrate competitive or superior performance to traditional NWP and even some cutting-edge AI models.[1-7]
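Two of the scores named above are simple enough to sketch directly. The Critical Success Index (CSI) is hits divided by hits plus misses plus false alarms, and a spread-error ratio near 1 suggests an ensemble whose spread matches its actual error. The event lists and numbers below are made up for illustration.

```python
import math


def critical_success_index(forecast_events, observed_events):
    """CSI = hits / (hits + misses + false alarms) for yes/no event forecasts."""
    hits = misses = false_alarms = 0
    for f, o in zip(forecast_events, observed_events):
        if f and o:
            hits += 1
        elif o:
            misses += 1
        elif f:
            false_alarms += 1
    denom = hits + misses + false_alarms
    return hits / denom if denom else float("nan")


def spread_error_ratio(ensemble_spreads, ensemble_mean_errors):
    """RMS ensemble spread divided by RMSE of the ensemble mean."""
    rms_spread = math.sqrt(sum(s * s for s in ensemble_spreads) / len(ensemble_spreads))
    rmse = math.sqrt(sum(e * e for e in ensemble_mean_errors) / len(ensemble_mean_errors))
    return rms_spread / rmse


# Hypothetical heavy-rain forecasts for five days.
forecast = [True, True, False, False, True]
observed = [True, False, True, False, True]
print(critical_success_index(forecast, observed))     # 0.5
# A ratio below 1 indicates an under-dispersive (overconfident) ensemble.
print(spread_error_ratio([1.0, 1.0], [2.0, -2.0]))     # 0.5
```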

Computational Cost and Accessibility

On the efficiency side, speed is a headline feature: Global AI ensemble forecasts are produced in minutes (e.g., FourCastNet: 7 seconds for 100-member 24-hour forecast; GenCast: 8 minutes for ensemble forecasts).[1,3,5]

This leads directly to better resource efficiency: Orders of magnitude less energy and infrastructure are required compared to legacy NWP, democratizing access for developing countries and urgent operational needs.[1-7]

Thanks to open data access, forecasts are shared for research and public use, accelerating innovation and cross-community collaboration.[2-4,6,8]

Section 5: Comparison to Other Global AI Weather Systems

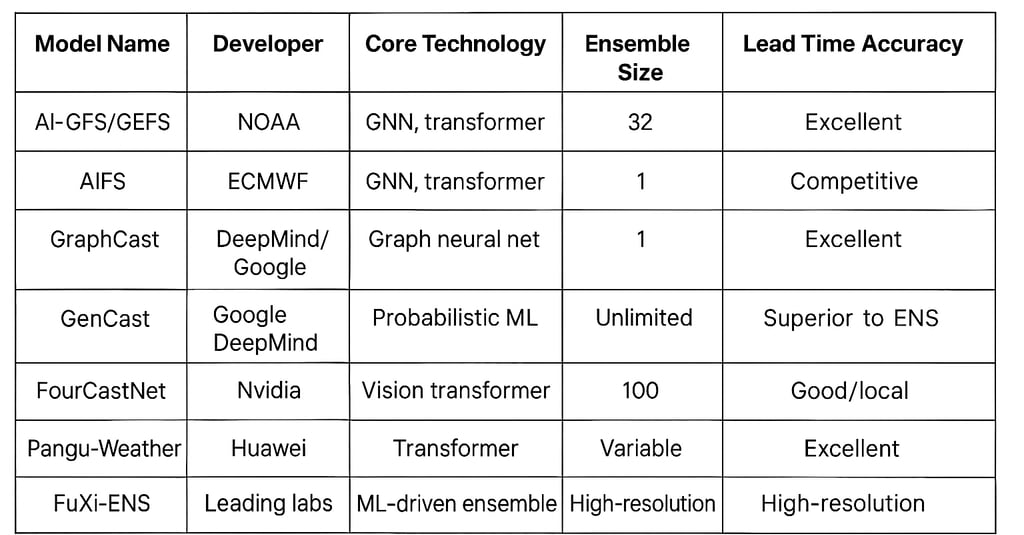

To put things in perspective, here's how NOAA's work compares against other global AI weather systems. The results are shown in the table above.

In summary, AI-GFS/GEFS distinguishes itself with an operational 32-member probabilistic ensemble, an industry milestone that brings together accuracy, uncertainty quantification, and broad applicability.[1-7]

Section 6: Scientific and Societal Impact

Reliability and Uncertainty

From a societal lens, large-member ensembles (like AI-GEFS) enable probabilistic forecasts, which are crucial for decision makers in disaster management, agriculture, logistics, and energy trading. The improved spread-error relationship and rapid ensemble generation are redefining operational reliability for weather-sensitive industries.[1,5,6,9]

Democratization of Forecasting

The accessibility aspect is profound: AI running efficiently in the background enables advanced forecasts for regions and sectors historically limited by infrastructure, expertise, or financial resources.[1-7]

Future Directions: Challenges and Opportunities

At the same time, AI weather systems face ongoing challenges:

Bias drift and stability: Long-lead forecasts risk bias drift, over-smoothing, and loss of fine-scale features. Researchers continue to refine architectures, loss functions, and post-processing algorithms.

Extreme event prediction: While AI matches or beats NWP for many events, rare extremes and compound hazards remain an ongoing frontier.

Interpretability: For operational meteorologists, bridging physical intuition and model predictions is vital for trust and operational uptake.

Integration with physics: Hybrid physics-AI models are emerging, combining deep learning with first-principles equations to address edge cases and improve generalizability.

Section 7: Technical Deep Dive: Model Design and Architecture Details

Graph Neural Networks and Transformers

Both AI-GFS and benchmark models (AIFS, GraphCast, GenCast) utilize graph-based representation and transformer attention. [2-7] This enables:

Spatio-temporal modeling: Atmospheric states are treated as graphs over the spherical Earth, and multi-step forecasts leverage sliding window transformers for temporal context.

Scalable training: Modular design accommodates high-resolution inputs, massive parallel processing, and incremental updates.

Fine-tuned performance: Advanced loss functions and uncertainties are engineered to preserve fine-scale features and avoid "double penalty" smoothing effects.
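The "double penalty" is easy to see with a toy one-dimensional example. A sharp feature forecast in slightly the wrong place is penalized twice by a point-wise metric (once for the miss, once for the false alarm), so a blurred forecast can score better. The arrays below are invented purely to illustrate that effect.

```python
def mse(forecast, truth):
    """Mean squared error between two equal-length sequences."""
    return sum((f - t) ** 2 for f, t in zip(forecast, truth)) / len(truth)


# Observed field: one sharp feature (for example, a rain cell) at index 3.
truth     = [0.0, 0.0, 0.0, 10.0, 0.0, 0.0, 0.0, 0.0]
# Sharp forecast with the right intensity, displaced by two grid points.
displaced = [0.0, 0.0, 0.0, 0.0, 0.0, 10.0, 0.0, 0.0]
# Blurred forecast: same total amount smeared across four grid points.
smoothed  = [0.0, 0.0, 2.5, 2.5, 2.5, 2.5, 0.0, 0.0]

print(mse(displaced, truth))  # 25.0
print(mse(smoothed, truth))   # 9.375
```

The blurred forecast wins on MSE even though the displaced one is arguably more realistic, which is why models trained purely on MSE tend to smooth.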

Model Training Specifications

The training process for AI weather models involves sophisticated data handling and computational requirements:

Training dataset: Petabytes of ERA5 reanalysis data from 1979 to present, containing hourly observations across 37 pressure levels [8]

Grid resolution: 0.25° latitude-longitude (approximately 28 km at the equator) with over 1 million grid points covering the entire Earth's surface [2-4,6-8]

Variables predicted:

1. Surface level: 5 variables including 2-meter temperature, mean sea-level pressure, 10-meter wind components [2-4,6-8]

2. Atmospheric levels: 6 variables at each of 13-37 pressure levels including specific humidity, temperature, wind components [2-4,6-8]

Training duration: Models like GenCast require approximately 5 days using 32 TPUs for full training [1,5]

Forecast cadence: Operational systems run 4 times daily at 00, 06, 12, and 18 UTC cycles [2-4,6]

Autoregressive steps: Models are trained with autoregressive steps ranging from 1 to 12-14 to optimize long lead-time forecast skills [2-4,6]
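The specifications above can be tallied with a little arithmetic, and the autoregressive idea can be sketched as a loop that feeds each prediction back in as input. This is a minimal sketch under stated assumptions: 13 pressure levels (the lower bound quoted above), a 6-hour model step (the step GraphCast uses; the step for AI-GFS is not stated here), and a hypothetical `toy_step` function standing in for the trained network.

```python
SURFACE_VARS = 5
ATMOS_VARS = 6
PRESSURE_LEVELS = 13   # assumption: lower bound of the 13-37 range above
GRID_POINTS = 721 * 1440
STEP_HOURS = 6         # assumption: GraphCast-style 6-hour step

channels = SURFACE_VARS + ATMOS_VARS * PRESSURE_LEVELS
values_per_state = channels * GRID_POINTS
print(channels, values_per_state)   # 83 86173920
print(12 * STEP_HOURS)              # 72 (hours covered by 12 autoregressive steps)


def rollout(step_fn, prev_state, curr_state, n_steps):
    """Autoregressive rollout: each prediction becomes the next input."""
    states = []
    for _ in range(n_steps):
        next_state = step_fn(prev_state, curr_state)
        states.append(next_state)
        prev_state, curr_state = curr_state, next_state
    return states


def toy_step(prev_state, curr_state):
    """Hypothetical stand-in for the network: damped linear extrapolation."""
    return [c + 0.5 * (c - p) for p, c in zip(prev_state, curr_state)]


print(rollout(toy_step, [0.0], [1.0], n_steps=3))  # [[1.5], [1.75], [1.875]]
```

Training over several rollout steps, rather than a single step, exposes the model to its own errors, which is the motivation for the multi-step training mentioned above.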

Architecture Innovation

In practice, the sliding window transformer processor enables the model to maintain temporal coherence across multiple forecast steps while the GNN encoder-decoder efficiently handles the spherical geometry of Earth's surface. This combination allows AI-GFS to preserve both local weather features and global atmospheric patterns simultaneously. [2,4,5]

Ensemble Generation Methods

Ensembles can be generated using:

Stochastic initial condition perturbations: Robustly simulates natural variability.[1,5,6]

Parameter uncertainty sampling: Mimics real-world model uncertainty.[1,5,6]

Latent variable sampling: Enables continuity and diversity in ensemble forecasts, particularly effective in GenCast's diffusion model approach.[1,5]

GenCast's diffusion model approach works by first setting atmospheric variables 12 hours into the future as random noise, then using a neural network to find structures in the noise compatible with current and previous weather variables. Multiple ensemble members are generated by starting with different random noise patterns.[1,5]
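The noise-to-forecast idea can be illustrated with a toy sampler. This is not GenCast's network or its actual sampling schedule: the "denoiser" below is a hypothetical closed-form stand-in (the exact posterior mean for a Gaussian target centered on a simple extrapolation of the two previous states), the states are assumed to be normalized anomalies, and all parameter values are assumptions chosen for illustration.

```python
import random


def toy_denoiser(noisy, target_mean, target_std, sigma):
    """Posterior-mean denoiser for a Gaussian target N(target_mean, target_std^2).

    Hypothetical stand-in for a trained neural network.
    """
    shrink = target_std ** 2 / (target_std ** 2 + sigma ** 2)
    return [m + shrink * (x - m) for x, m in zip(noisy, target_mean)]


def sample_member(prev_state, curr_state, seed, target_std=0.3,
                  n_steps=20, sigma_max=50.0, sigma_min=0.05):
    """Draw one ensemble member by iteratively denoising random noise."""
    rng = random.Random(seed)
    # Conditioning on the two most recent states: here, a linear extrapolation.
    target_mean = [c + (c - p) for p, c in zip(prev_state, curr_state)]
    # Geometric noise schedule from sigma_max down to sigma_min.
    sigmas = [sigma_max * (sigma_min / sigma_max) ** (i / n_steps)
              for i in range(n_steps + 1)]
    # Start from pure noise.
    x = [rng.gauss(0.0, sigma_max) for _ in curr_state]
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = toy_denoiser(x, target_mean, target_std, sigma)
        # Deterministic Euler step: shrink the remaining noise toward the estimate.
        x = [d + (sigma_next / sigma) * (xi - d) for xi, d in zip(x, denoised)]
    return x


# Normalized anomalies at two grid points, 12 hours apart (made-up values).
prev_state = [0.2, -0.1]
curr_state = [0.5, 0.0]
members = [sample_member(prev_state, curr_state, seed=s) for s in range(32)]
print([round(v, 2) for v in members[0]])
print([round(v, 2) for v in members[1]])
```

Each seed gives a different starting noise pattern and therefore a different, but plausible, member; the same seed always reproduces the same member.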

Section 8: Summary - NOAA's AI Weather Models at the Forefront

Bringing it all together, NOAA's operational deployment of AI-GFS and AI-GEFS marks a historic waypoint in the evolution of weather forecasting. Leveraging foundation models, graph neural networks, transformer architectures, and efficient probabilistic ensembles, these systems deliver unprecedented accuracy, speed, and actionable uncertainty quantification.

The 32-member AI-GEFS ensemble represents a unique operational achievement, providing probabilistic forecasts with superior spread-error characteristics at computational costs orders of magnitude lower than traditional physics-based ensembles. Verification studies demonstrate approximately 10% RMSE reduction for critical variables like 500 hPa geopotential in day-10 forecasts, with particularly strong performance over observation-sparse regions.

Concrete examples like Typhoon Hagibis (2019) and Hurricane Idalia (2023) demonstrate the real-world value of these advances, with GenCast's ensemble showing a roughly 12-hour track-accuracy advantage over ECMWF's ENS in the Hagibis case.

As leading institutions (ECMWF, DeepMind, Huawei, Nvidia) bring their own AI models online, cross-collaboration and hybrid approaches continue driving innovation. The future of weather prediction is here, powered by AI, validated by performance, and democratized through accessibility.

Ultimately, for anyone watching the skies, from researchers and forecasters to farmers, pilots, and city planners, these AI systems are quietly turning tomorrow's weather into a clearer, more predictable story today.

REFERENCES

[1] Nature (2024) - "Probabilistic weather forecasting with machine learning" - GenCast paper by Google DeepMind.

[2] Science (2023) - "Learning skillful medium-range global weather forecasting" - GraphCast paper by DeepMind.

[3] arXiv:2202.11214 - "FourCastNet: A Global Data-driven High-resolution Weather Model" by Pathak et al.

[4] arXiv:2406.01465 - "AIFS -- ECMWF's data-driven forecasting system" by Lang et al.

[5] arXiv:2405.00059 - "GenCast: Diffusion-based ensemble forecasting" by Google DeepMind.

[6] arXiv:2408.05137 - "FuXi-ENS: A machine learning model for medium-range ensemble weather forecasting".

[7] Nature (2023) - "Accurate medium-range global weather forecasting with 3D neural networks" - Pangu-Weather paper.

[8] ERA5 Reanalysis Dataset - ECMWF's fifth generation reanalysis.

[9] Tropical Cyclone Verification Sources - National Hurricane Center (NHC) and HFIP post-storm verification reports and operational documentation for Typhoon Hagibis (2019), Hurricane Idalia (2023), and ENS/HCCA comparison metrics.
